↧

The Developer Dashboard in SharePoint 2013

↧

Restricting SharePoint Designer access

Overview: I dislike SharePoint Designer (SPD). Depending on your company's or client's SharePoint governance, you can control the use of SPD on your farms. My default position is to turn it off completely. SPD can be configured at web application (WA) or site collection level.

My position is to turn off SharePoint Designer on your production and UAT farms. If someone needs SPD, they should rather do the work in dev and package it for deployment, or you can turn on specific access. SharePoint Central Administration (CA) can be used to configure how SPD works on specific web apps.

Controlling SPD at WA level can be done via PowerShell (PS) or CA. Tip: CA > General Application Settings > SharePoint Designer > Configure SharePoint Designer Settings > select the web app to edit and adjust accordingly.
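A minimal sketch of the PS route, assuming it runs in the SharePoint 2013 Management Shell on a farm server. `Set-SPDesignerSettings` is the SharePoint cmdlet behind the CA page; the wrapper name `Disable-SPDesignerForWebApps` is hypothetical. By default it touches every web application in the farm, so pass `-Url` to limit it.

```powershell
# Sketch: turn SharePoint Designer off on one or more web applications.
# Assumes the SharePoint snap-in is loaded (SharePoint 2013 Management Shell).
# Disable-SPDesignerForWebApps is a hypothetical helper name.
function Disable-SPDesignerForWebApps {
    param(
        # Web application URLs; defaults to every web application in the farm
        [string[]]$Url = @(Get-SPWebApplication | ForEach-Object { $_.Url })
    )
    foreach ($u in $Url) {
        # Colon syntax passes an explicit $false to each setting
        Set-SPDesignerSettings -WebApplication $u `
            -AllowDesigner:$false `
            -AllowRevertFromTemplate:$false `
            -AllowMasterPageEditing:$false `
            -ShowURLStructure:$false
        Write-Output "SharePoint Designer disabled on $u"
    }
}
```

For example, `Disable-SPDesignerForWebApps -Url "http://webuat.demo.dev"` limits the change to one web app. At site collection level, the `SPSite` object exposes matching properties such as `AllowDesigner`.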

More Info:

http://blog.ciaops.com/2013/06/disabling-sharepoint-designer-access-in.html

↧

Verify SP 2013 Search Installations

This script uses PowerShell to check Search on a farm; it shows where components are configured and whether they are working.

There are more detailed scripts on the web; this is a simple check.
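The script itself isn't included in the post. As a hedged sketch of a simple check along the same lines, the function below (hypothetical name `Get-SearchComponentHealth`) joins the components of the active topology with the state reported by `Get-SPEnterpriseSearchStatus`, so you can see which server each component lives on and whether it is healthy. It assumes the SharePoint snap-in is loaded and that there is a single Search service application (pass `-SearchApplication` otherwise).

```powershell
# Sketch: list each component of the active search topology with its server and state.
# Get-SearchComponentHealth is a hypothetical helper name.
function Get-SearchComponentHealth {
    param($SearchApplication = (Get-SPEnterpriseSearchServiceApplication))
    $topology = Get-SPEnterpriseSearchTopology -SearchApplication $SearchApplication -Active
    $status = Get-SPEnterpriseSearchStatus -SearchApplication $SearchApplication
    foreach ($component in Get-SPEnterpriseSearchComponent -SearchTopology $topology) {
        # Match the status entry to the component by name (e.g. AdminComponent1)
        $state = ($status | Where-Object { $_.Name -eq $component.Name }).State
        [pscustomobject]@{ Component = $component.Name; Server = $component.ServerName; State = $state }
    }
}
```

Piping the result to `Format-Table -AutoSize` gives a quick one-screen view of the farm.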

↧

Planning Suggestion for SharePoint 2013

Overview: It is always a good idea to have an exact breakdown of your SharePoint architecture. I do this using a diagram and a corresponding spreadsheet. This post has an example of the spreadsheet; I have a tab for each DTAP environment before I build it out.

| Server Name | Server Role | Logical Group | CPU | C: (GB) | D: (GB) | RAM (GB) | Location | IP | Environment |
|---|---|---|---|---|---|---|---|---|---|
| SVR-PR-WFE1 | SharePoint Web Front End | SP WFE | 4 | 90 | 80 | 16 | London | 10.189.10.50 | Production |
| SVR-PR-WFE2 | SharePoint Web Front End | SP WFE | 4 | 90 | 80 | 16 | London | 10.189.10.51 | Production |
| SVR-PR-WFE3 | SharePoint Web Front End | SP WFE | 4 | 90 | 80 | 16 | M | 10.189.10.52 | Production |
| SVR-PR-WFE4 | SharePoint Web Front End | SP WFE | 4 | 90 | 80 | 16 | M | 10.189.10.53 | Production |
| SVR-PR-APP1 | SharePoint Application Server | SP APP | 4 | 90 | 80 | 16 | London | 10.189.10.54 | Production |
| SVR-PR-APP2 | SharePoint Application Server | SP APP | 4 | 90 | 80 | 16 | London | 10.189.10.55 | Production |
| SVR-PR-APP3 | SharePoint Application Server | SP APP | 4 | 90 | 80 | 16 | M | 10.189.10.56 | Production |
| SVR-PR-APP4 | SharePoint Application Server | SP APP | 4 | 90 | 80 | 16 | M | 10.189.10.57 | Production |
| SVR-PR-OWA1 | Office Web Applications | OWA | 8 | 90 | 80 | 16 | London | 10.189.10.58 | Production |
| SVR-PR-OWA2 | Office Web Applications | OWA | 8 | 90 | 80 | 16 | London | 10.189.10.59 | Production |
| SVR-PR-OWA3 | Office Web Applications | OWA | 8 | 90 | 80 | 16 | M | 10.189.10.60 | Production |
| SVR-PR-OWA4 | Office Web Applications | OWA | 8 | 90 | 80 | 16 | M | 10.189.10.61 | Production |
| SVR-PR-WF1 | Workflow Services | SP WF | 4 | 90 | 120 | 8 | London | 10.189.10.62 | Production |
| SVR-PR-WF2 | Workflow Services | SP WF | 4 | 90 | 120 | 8 | M | 10.189.10.63 | Production |
| SVR-PR-SRCH1 | SharePoint Search Type A | Search | 8 | 134 | 80 | 32 | London | 10.189.10.70 | Production |
| SVR-PR-SRCH2 | SharePoint Search Type A | Search | 8 | 134 | 80 | 32 | M | 10.189.10.71 | Production |
| SVR-PR-SRCH3 | SharePoint Search Type B | Search | 8 | 134 | 300 | 24 | London | 10.189.10.72 | Production |
| SVR-PR-SRCH4 | SharePoint Search Type B | Search | 8 | 134 | 300 | 24 | M | 10.189.10.73 | Production |
| SVR-PR-SRCH5 | SharePoint Search Type C | Search | 8 | 134 | 500 | 24 | London | 10.189.10.74 | Production |
| SVR-PR-SRCH6 | SharePoint Search Type C | Search | 8 | 134 | 500 | 24 | M | 10.189.10.75 | Production |
| SVR-PR-SRCH7 | SharePoint Search Type D | Search | 8 | 134 | 500 | 24 | London | 10.189.10.76 | Production |
| SVR-PR-SRCH8 | SharePoint Search Type D | Search | 8 | 134 | 500 | 24 | M | 10.189.10.77 | Production |
| SVR-PR-SRCH9 | SharePoint Search Type D | Search | 8 | 134 | 500 | 24 | London | 10.189.10.78 | Production |
| SVR-PR-SRCH10 | SharePoint Search Type D | Search | 8 | 134 | 500 | 24 | M | 10.189.10.79 | Production |
| SVR-PR-DBS1 | SharePoint Databases | SQL | 16 | 134 | 500 | 32 | London | 10.189.10.85 | Production |
| SVR-PR-DBS2 | SharePoint Databases | SQL | 16 | 134 | 500 | 32 | M | 10.189.10.86 | Production |
| CL-PR-DBS | Cluster | | | | | | | 10.189.10.87 | |
| LS-PR-DBS | Listener | | | | | | | 10.189.10.88 | |
| SVR-PR-DBR1 | SSRS & SSAS Databases | SQL | 8 | 134 | 500 | 32 | London | 10.189.10.89 | Production |
| SVR-PR-DBR2 | SSRS & SSAS Databases | SQL | 8 | 134 | 500 | 32 | M | 10.189.10.90 | Production |
| CL-PR-DBR | Cluster | | | | | | | 10.189.10.91 | |
| LS-PF-SP-DBR | Listener | | | | | | | 10.189.10.92 | |
| SVR-PR-DBA1 | TDS & K2 Databases | SQL | 16 | 134 | 500 | 32 | London | 10.189.10.93 | Production |
| SVR-PR-DBA2 | TDS & K2 Databases | SQL | 16 | 134 | 500 | 32 | M | 10.189.10.94 | Production |
| CL-PR-DBA | Cluster | | | | | | | 10.189.10.95 | |
| LS-PR-DBA | Listener | | | | | | | 10.189.10.96 | |
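If a tab is exported to CSV, a quick check can catch copy-and-paste mistakes before the build. A sketch, assuming the CSV keeps the `Server Name`, `Logical Group`, `Location` and `IP` column headers from the table; `Test-FarmPlan` and the file name in the usage line are hypothetical. It reports duplicate names or IPs, and any logical group whose servers all sit in one location (the plan above splits every group across London and M).

```powershell
# Sketch: sanity-check one planning tab exported to CSV (one row per server).
# Test-FarmPlan is a hypothetical helper; it relies on the Server Name,
# Logical Group, Location and IP column headers used in the table above.
function Test-FarmPlan {
    param([Parameter(Mandatory)][object[]]$Rows)
    $problems = @()

    # Server names and IP addresses must be unique across the tab
    foreach ($col in 'Server Name', 'IP') {
        $dupes = $Rows | Where-Object { $_.$col } |
            Group-Object -Property $col | Where-Object { $_.Count -gt 1 }
        foreach ($d in $dupes) { $problems += "Duplicate ${col}: $($d.Name)" }
    }

    # Every logical group should have servers in more than one location
    $groups = $Rows | Where-Object { $_.'Logical Group' -and $_.Location } |
        Group-Object -Property 'Logical Group'
    foreach ($g in $groups) {
        $locations = @($g.Group | ForEach-Object { $_.Location } | Select-Object -Unique)
        if ($locations.Count -lt 2) { $problems += "Logical group only in one location: $($g.Name)" }
    }
    $problems
}
```

Usage: `Test-FarmPlan -Rows (Import-Csv .\Production.csv)`; no output means no problems were found.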

↧

IIS setting for SharePoint 2013

This is a work in progress!

1.> Change the IIS log location for existing websites. This needs to be done on each WFE in your farm, provided you want to change them.

PS script to change the IIS log directory for existing websites.

2.> Disable IIS recycling
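The scripts for both items are not included above. A sketch using the IIS `WebAdministration` provider, assuming it is run on each WFE after `Import-Module WebAdministration`; the function names and the `D:\IISLogs` path are hypothetical. SharePoint-created app pools may also have a scheduled recycle time (`recycling.periodicRestart.schedule`); this sketch only zeroes the time-based interval and leaves any schedule untouched.

```powershell
# Sketch: run on each WFE after Import-Module WebAdministration.
# Both function names are hypothetical helpers.
function Set-IISSiteLogDirectory {
    param([Parameter(Mandatory)][string]$Directory)
    foreach ($site in Get-Website) {
        # logFile.directory is the per-site IIS log location
        Set-ItemProperty -Path "IIS:\Sites\$($site.Name)" -Name logFile.directory -Value $Directory
        Write-Output "$($site.Name) -> $Directory"
    }
}

function Disable-AppPoolPeriodicRecycle {
    param([string[]]$Name = @(Get-ChildItem IIS:\AppPools | ForEach-Object { $_.Name }))
    foreach ($pool in $Name) {
        # A zero interval turns off time-based recycling; scheduled times are not changed
        Set-ItemProperty -Path "IIS:\AppPools\$pool" -Name recycling.periodicRestart.time -Value "00:00:00"
        Write-Output "Periodic recycling disabled for $pool"
    }
}
```

Usage: `Set-IISSiteLogDirectory -Directory "D:\IISLogs"`, then `Disable-AppPoolPeriodicRecycle -Name "SharePoint - 80"` for the pools you want to change.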

↧

Office Web App ran into a problem opening this document

Problem: When opening a Word document, Office Web Apps fails to render it and shows the following error: "There's a configuration problem preventing us from getting your document. If possible, try opening this document in Microsoft Word."

Initial Hypothesis: The error doesn't tell me much, so I checked the ULS logs on the Web Front End (SharePoint server) and found the following issue:

WOPI (CheckFile) - Invalid Proof Signature for file SandPit Environment Setup.docx url: http://web-sp2013-uat.demo.dev/Docs/_vti_bin/wopi.ashx/files/6d0f38c0d5554c87a655558da9cedcad?access_token...

Resolution: Run the following PS> Update-SPWOPIProofKey -ServerName "wca-uat.demo.dev"
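If SharePoint is bound to more than one Office Web Apps host name, each one needs its proof key refreshed. A sketch, assuming it runs on a SharePoint server with the snap-in loaded; `Update-AllWOPIProofKeys` is a hypothetical helper that reads the host names from the existing bindings with `Get-SPWOPIBinding` and calls `Update-SPWOPIProofKey` once per host.

```powershell
# Sketch: refresh the cached WOPI proof key for every Office Web Apps host
# that SharePoint currently has bindings for.
# Update-AllWOPIProofKeys is a hypothetical helper name.
function Update-AllWOPIProofKeys {
    # Many bindings (one per application/extension/action) share a host name
    $servers = Get-SPWOPIBinding | Select-Object -ExpandProperty ServerName -Unique
    foreach ($server in $servers) {
        Update-SPWOPIProofKey -ServerName $server
        Write-Output "Proof key refreshed for $server"
    }
}
```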

More Info:

http://technet.microsoft.com/en-us/library/jj219460.aspx

===========================================

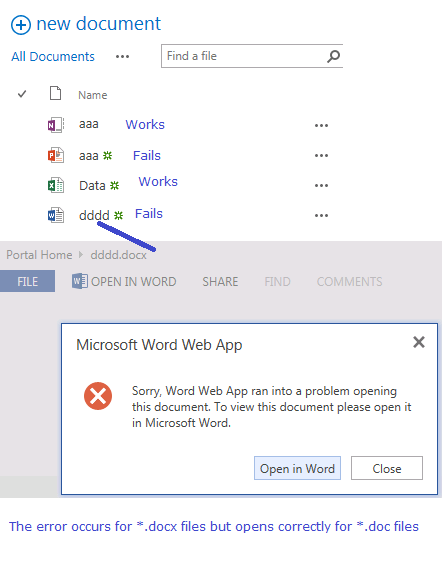

Problem: Opening docx or pptx files in Office Web Apps 2013 results in the error "Sorry, Word Web App ran into a problem opening this document. To view this document please open it in Microsoft Word."

Resolution: I don't like it, but I had to remove the link to the WCA farm, rebuild the WCA farm and hook SP2013 back up to it. Other documents were opening, and I realised that my bindings were incorrect after I rebuilt.
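A sketch of re-creating the bindings after a rebuild, assuming the SharePoint snap-in is loaded. `Reset-WOPIBindings` is a hypothetical wrapper around the `Remove-SPWOPIBinding`, `New-SPWOPIBinding` and `Set-SPWOPIZone` cmdlets. The zone should match how users reach SharePoint (for example `internal-http` for an HTTP-only web application), and `-AllowHTTP` is only needed if discovery against the WCA farm has to use HTTP. Review the returned bindings before testing documents again.

```powershell
# Sketch: rebuild SharePoint's WOPI bindings against a (re)built WCA farm.
# Reset-WOPIBindings is a hypothetical helper name.
function Reset-WOPIBindings {
    param(
        [Parameter(Mandatory)][string]$ServerName,
        [string]$Zone = 'internal-https',
        [switch]$AllowHTTP
    )
    # Drop every existing binding so no stale entries survive the rebuild
    Remove-SPWOPIBinding -All:$true -Confirm:$false
    New-SPWOPIBinding -ServerName $ServerName -AllowHTTP:$AllowHTTP | Out-Null
    Set-SPWOPIZone -Zone $Zone
    # Return the new bindings so they can be checked
    Get-SPWOPIBinding | Select-Object Application, Extension, Action, ServerName
}
```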

===========================================

Problem: When opening a docx file using WCA (Office Web Apps), I get the following error message: "Sorry, there was a problem and we can't open this document. If this happens again, try opening the document in Microsoft Word."

I then tried to open an Excel document and got the error: "We couldn't find the file you wanted. It's possible the file was renamed, moved or deleted."

Initial Hypothesis: I checked the ULS logs on the only OWA server and found this unexpected error:

HttpRequestAsync, (WOPICheckFile,WACSERVER) no response [WebExceptionStatus:ConnectFailure, url:http://webuat.demo.dev/_vti_bin/wopi.ashx/...

This appears to be a networking-related issue: I have an NLB (KEMP) and I am using a wildcard certificate on the WCA address with SSL termination.

Resolution: The error message tells me that the OWA server can't get back to the SharePoint WFE servers. The request from the WCA/OWA1 server back to the SharePoint front end is made over HTTP, not HTTPS, and my NLB can't handle traffic on port 80.

I added a host entry on my OWA1 server so that traffic to the SharePoint web application goes directly to a WFE by IP, and it works. However, this means I don't have high availability; an NLB service handling the web application on port 80 will fix the issue properly.

The OWA server needs to access the web app on port 80. My NLB stopped all traffic on port 80.
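A sketch of the host-entry workaround, assuming it runs elevated on each OWA server. `Add-HostsEntry` is a hypothetical helper and the IP in the usage line is a placeholder for your WFE1 address; as described above, this pins traffic to one WFE and gives up high availability.

```powershell
# Sketch: map the web application host name to one WFE in the hosts file.
# Add-HostsEntry is a hypothetical helper; run it elevated on each OWA server.
function Add-HostsEntry {
    param(
        [Parameter(Mandatory)][string]$IPAddress,
        [Parameter(Mandatory)][string]$HostName,
        [string]$Path = "$env:windir\System32\drivers\etc\hosts"
    )
    # Skip if a non-comment line already maps this host name
    $existing = Get-Content -Path $Path |
        Where-Object { $_ -notmatch '^\s*#' -and ($_ -split '\s+') -contains $HostName }
    if ($existing) { return "Already mapped: $existing" }

    # Make sure the new entry starts on its own line
    $raw = Get-Content -Path $Path -Raw
    if ($raw -and -not $raw.EndsWith("`n")) { Add-Content -Path $Path -Value '' }

    Add-Content -Path $Path -Value "$IPAddress`t$HostName"
    "Added $IPAddress $HostName"
}
```

Usage: `Add-HostsEntry -IPAddress 10.0.0.5 -HostName webuat.demo.dev` (placeholder IP). On Windows Server 2012 R2 or later, `Test-NetConnection -ComputerName webuat.demo.dev -Port 80` is a quick way to confirm port 80 is reachable from the OWA server.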

==========================================

Problem: The above issue was temporarily fixed by adding a host entry on the WCA1 server so that requests to the web application URL on port 80 go back to WFE1. I turned on WCA2 and WFE2, so I now have 2 SharePoint web front ends and a 2-server WCA farm. In my testing I have docx, doc and Excel files. From multiple locations I could open and edit the docx and doc files, but opening the Excel file gave me this error: "Couldn't Open the Workbook

We're sorry. We couldn't open your workbook. You can try to open this file again, sometimes that helps."

Initial Hypothesis: Documents are cached, and the internal load balancing seemed to make Word documents available in Office Web Apps. I assume the requests are served from the cache or by the OWA server that has the host entry. I need to tell OWA where to go via a host entry or an NLB entry. Note: using a host entry won't make OWA highly available/redundant. This is the same issue as in the problem above.

Resolution: I added a host entry on the WCA2 server that points to the WFE1 machine.

↧

SP 2013 SSRS failing after RBS enabled and disabled

Problem: I have SSRS (SharePoint mode) enabled on my SharePoint 2013 farm using SQL 2012, and it was working. I enabled AvePoint's RBS provider on the farm and enabled RBS on 1 of 2 web applications. I then disabled RBS on that web application and assumed all was good; however, SSRS stopped working and threw 1 of 2 errors on the web application where RBS had been enabled and then disabled:

"For more information about this error navigate to the report server on the local server machine, or enable remote errors" or ....

Note: RDL files that were in the system before RBS was enabled work; RDL files added while RBS was enabled don't work, and RDL files added after RBS was disabled also fail.

Initial Hypothesis/Error tracking:

1.> SSRS and WCA errors unfortunately don't get correlation IDs, so I turned off all the SSRS SSA instances except one, so I know which server's ULS log will contain the error.

2.> I ran the request for the report again (an rdl that just displays a label, so I know the report itself is good) so the error is captured in the ULS log.

3.> I painfully open the latest log using ULS viewer and scan for errors and I find:

System.Data.SqlClient.SqlException (0x80131904): The EXECUTE permission was denied on the object 'rbs_fn_get_blob_reference', database 'SP_Content_PaulXX', schema 'mssqlrbs'.

at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)

4.> Now I am going nuts, as RBS has been disabled. I decide to trace the request in SQL Profiler; I can't find the call there, and while looking for it I realise the function is not in the database. I also find a post suggesting changing permissions, but as I don't have the function, permissions aren't my issue.

5.> I start looking at RBS on the farm using PowerShell:

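```powershell
# Run in the SharePoint 2013 Management Shell
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue
cls
# Report, per content database, whether SharePoint thinks RBS is enabled
$cdbs = Get-SPContentDatabase
foreach ($cdb in $cdbs)
{
    $rbs = $cdb.RemoteBlobStorageSettings
    Write-Host "Content DB:" $cdb.Name
    Write-Host "Enabled:" $rbs.Enabled
    Write-Host "Active provider:" $rbs.ActiveProviderName
}
```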

I notice that the content database 'SP_Content_PaulXX' mentioned in the ULS log still has the RBS Enabled flag set to True.

Resolution:

*************************************************

Problem: I have SP2013 + SQL 2012, and I am using SSRS in SharePoint mode. The app pool accounts for my web app and my SSRS SSA are different, and they run on separate servers. For this to occur, you need SP2013, SSRS and RBS enabled (or the content database still thinking RBS is enabled); additionally, the service account used by the SSRS SSA needs to have minimal permissions. Existing rdl files display correctly; however, any rdl files added throw an exception. The diagram below further explains the scenario:

Initial Hypothesis: Trawling through the ULS logs shows the error: System.Data.SqlClient.SqlException (0x80131904): The EXECUTE permission was denied on the object 'rbs_fn_get_blob_reference', database...

A snippet of the ULS is shown below:

Resolution: The app pool account used by the SSRS Service Application needs to have permissions to run the function.

1.> Figure out the app pool account used by the SSRS SSA if you don't know it as shown below:

2.> Give the SP_Services account permissions over the failing execution calls. To prove the diagnosis, temporarily give the account dbo rights.
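The screenshots for these steps are not included. A hedged sketch of both steps: `Get-SSRSAppPoolAccount` and `New-RbsGrantSql` are hypothetical helpers. The first assumes the SSRS service application's type name contains "Reporting Services"; the second only builds a narrow `GRANT EXECUTE` statement (a less privileged alternative to dbo once the diagnosis is proven) and assumes a database user with the same name as the account already exists in the content database.

```powershell
# Sketch: find the SSRS service application's app pool account and
# build a narrow GRANT for the RBS function named in the ULS error.
# Both function names are hypothetical helpers.
function Get-SSRSAppPoolAccount {
    Get-SPServiceApplication |
        Where-Object { $_.TypeName -like '*Reporting Services*' } |
        ForEach-Object { [pscustomobject]@{ Name = $_.DisplayName; Account = $_.ApplicationPool.ProcessAccountName } }
}

function New-RbsGrantSql {
    param([Parameter(Mandatory)][string]$Account)
    # Square brackets quote the principal; a ']' inside the name is escaped as ']]'
    $principal = $Account.Replace(']', ']]')
    "GRANT EXECUTE ON OBJECT::[mssqlrbs].[rbs_fn_get_blob_reference] TO [$principal];"
}
```

The statement can then be run against the affected content database, e.g. `Invoke-Sqlcmd -ServerInstance <sql instance> -Database SP_Content_PaulXX -Query (New-RbsGrantSql -Account "DEMO\SP_Services")`, which needs the SQL Server PowerShell module.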

Thanks to Sam Keytel and Mark Oburoh for looking at this.

More Info:

↧

CU Upgrading On Prem Office Web Apps 2013

Problem: I need to upgrade my WCA farm from the RTM version to the March 2013 CU to allow PDFs to be displayed with Office Web Apps.

Steps to upgrade an existing WCA farm:

- Copy exe to machine: OWA1 & OWA2 (D:\OfficeWebApps\March 2013 CU)

- Remove the secondary servers from the farm. In my case this is WCA2: remote into SP-WCA2 and run PS> Remove-OfficeWebAppsMachine (on WCA2)

- Run the exe on the secondaries (WCA2), reboot, shut down and snapshot, then do the same on the primary (WCA1)

- Check the primary: verify the URL http://wcauat.demo.dev/hosting/discovery works. This can be done on the local machine, or using https from a client machine.

- Create a new OWA farm; this will run over the top of your existing farm. PS> New-OfficeWebAppsFarm ... (on WCA1)

- Join the secondary to the farm: PS> New-OfficeWebAppsMachine -MachineToJoin "sp-wca1" (the primary)

- Activate WordPDF (run on a SharePoint server): PS> New-SPWOPIBinding -ServerName "wcauat.gstt.nhs.uk" -Application "WordPDF" -AllowHTTP
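The discovery check in the list can be scripted, for example to run against each server after patching. A sketch, assuming PowerShell 3.0 or later for `Invoke-WebRequest`; `Test-WacDiscovery` is a hypothetical name. It returns `$true` when the `/hosting/discovery` URL answers with HTTP 200 and a `<wopi-discovery>` XML body.

```powershell
# Sketch: confirm an Office Web Apps server answers its WOPI discovery URL.
# Test-WacDiscovery is a hypothetical helper name.
function Test-WacDiscovery {
    param([Parameter(Mandatory)][string]$BaseUrl)
    $uri = $BaseUrl.TrimEnd('/') + '/hosting/discovery'
    try {
        $response = Invoke-WebRequest -Uri $uri -UseBasicParsing
        # A healthy farm returns 200 and an XML document rooted at <wopi-discovery>
        return ($response.StatusCode -eq 200 -and $response.Content -match '<wopi-discovery')
    } catch {
        Write-Warning "Discovery failed for ${uri}: $($_.Exception.Message)"
        return $false
    }
}
```

Usage: `Test-WacDiscovery -BaseUrl https://wcauat.demo.dev`.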

More Info:

You can verify the version of your WCA farm using the following (I'm not sure this reports the correct version):

(Invoke-WebRequest https://wcauat.demo.dev/.../jsonAnonymous/BroadcastPing).Headers["X-OfficeVersion"]

↧

Understanding SQL backups on SharePoint databases using the Full recovery model

Overview: This post looks at reducing the footprint of the ldf file. SharePoint-related databases using the Full recovery model keep all transactions since the last transaction log backup. The post explains how SQL is affected by different backups, such as SQL backups, SharePoint backups and 3rd-party backup tools (both SharePoint and SQL backup tools).

This post does not discuss why all your databases are in the Full recovery model, or compare competing backup products. It does contain steps to truncate and then shrink the transaction log.

Note: Shrinking an ldf file only for it to regrow each week/cycle is bad practice. The only time to shrink is when the log holds unused space from transactions that are already covered by backups.

Steps:

1.> Determine which databases are suitable candidates for shrinking the log file.

```sql
DBCC SQLPERF(LOGSPACE);
```

2.> Perform a full backup.

3.> Next, perform a transaction log backup, which truncates the log.

4.> Run DBCC SHRINKFILE as shown below. Leave headroom in the ldf so it does not have to grow again; this is meant for reducing a log file that has grown far too large. The target size (100 below) is in MB.

```sql
USE AutoSP_Config;
GO
DBCC SHRINKFILE (AutoSP_Config_log, 100);
GO
```

5.> Verify the ldf file has reduced in size.
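Steps 2 to 4 can be scripted as one T-SQL batch. A sketch that only builds the statement text, so it can be reviewed before running it with `Invoke-Sqlcmd -ServerInstance <instance> -Query $sql`; `New-LogShrinkSql`, the backup folder and the file names are hypothetical. Note that this takes a regular (not copy-only) full backup, so it becomes the new "base of the differential", which matters if another product also backs up these databases.

```powershell
# Sketch: build the T-SQL for a full backup, a log backup (which truncates
# the log) and a shrink of the log file. New-LogShrinkSql is hypothetical.
function New-LogShrinkSql {
    param(
        [Parameter(Mandatory)][string]$Database,
        [Parameter(Mandatory)][string]$LogicalLogName,
        [Parameter(Mandatory)][string]$BackupFolder,
        [int]$TargetSizeMB = 100   # DBCC SHRINKFILE target size, in MB
    )
    $folder = $BackupFolder.TrimEnd('\')
    @"
BACKUP DATABASE [$Database] TO DISK = N'$folder\$Database.bak';
BACKUP LOG [$Database] TO DISK = N'$folder\$Database.trn';
USE [$Database];
DBCC SHRINKFILE (N'$LogicalLogName', $TargetSizeMB);
"@
}
```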

Background:

- Using the full recovery model allows you to restore to a specific point in time.

- A full backup is referred to as your "base of the differential".

- A "copy-only" backup cannot be used as a "base of the differential", this becomes important when there are multiple providers backing up SQL databases.

- After a full backup is taken, differential backups ("differentials") can be taken. A differential contains all changes to your database since the last "base of the differential". Differentials are cumulative, so each one grows bigger than the last until a new "base of the differential" is taken.

- To restore, you need the "base of the differential" (last full backup) and the latest "differential" backup.

- You can also back up the transaction log (in effect, the ldf). Log backups need to be restored in sequential order, so you need every log backup in the chain.

- If you still have your database, you can take a "tail-log" backup, which allows you to restore up to the moment of failure.

- Every backup gets a "Log Sequence Number" (LSN), which allows SQL Server to build a chain of backups for restore purposes. This chain can be broken by 3rd-party tools or by switching the database to the Simple recovery model.

- A confusing pair of closely related terms is "shrinking" and "truncating". You may notice an ldf file has grown extremely large; if you are performing full backups on a schedule, this is a good size to keep the ldf at, because growing ldf files on the fly is extremely resource intensive. However, if your log file has not been purging committed transactions within a cycle, you may end up with a completely oversized ldf file. In this scenario, perform a full backup and truncate your logs (via a transaction log backup): this removes committed transactions and marks the space they used as available for reuse. You can then perform a "shrink" to reduce the size of the ldf file. Again, you don't want ldf files growing every cycle, so don't schedule the shrinking.

- "Truncating" is marking committed transactions in the ldf as "free", i.e. available for writing new transactions to.

- "Shrinking" will reduce the physical size of the ldf. Shrinking can reclaim space from the "free" space in the ldf.
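The restore rules above (latest usable full backup, the latest differential after it, then every log backup in order) can be expressed as a small selection routine. A simplified sketch: `Get-RestoreChain` is hypothetical, it orders backups by finish time rather than by LSN, and it assumes the log chain has no gaps, so treat it as an illustration rather than a substitute for the backup history SQL Server records.

```powershell
# Sketch: choose the backups needed for a restore, following the rules above.
# Input objects need Type (Full/Diff/Log), Finished (datetime) and CopyOnly.
# Get-RestoreChain is a hypothetical helper; real restores follow LSNs.
function Get-RestoreChain {
    param([Parameter(Mandatory)][object[]]$Backups)
    $ordered = $Backups | Sort-Object Finished

    # Copy-only full backups cannot act as the base of the differential
    $base = $ordered | Where-Object { $_.Type -eq 'Full' -and -not $_.CopyOnly } | Select-Object -Last 1
    if (-not $base) { throw 'No usable full backup found.' }
    $chain = @($base)

    # Differentials are cumulative, so only the latest one after the base is needed
    $diff = $ordered | Where-Object { $_.Type -eq 'Diff' -and $_.Finished -gt $base.Finished } | Select-Object -Last 1
    if ($diff) { $chain += $diff }

    # Log backups must be applied in sequence, so every one after that point is needed
    $after = if ($diff) { $diff.Finished } else { $base.Finished }
    $chain += @($ordered | Where-Object { $_.Type -eq 'Log' -and $_.Finished -gt $after })
    $chain
}
```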

↧

Search stops working and CA Search screens error

Problem: In my redundant Search farm, search falls over. It was working and nothing appears to have changed, but it suddenly stopped working. The problem surfaced in several places:

1.> In CA going to "Search Administration" displays the following error message: Search Application Topology - Unable to retrieve topology component health states. This may be because the admin component is not up and running.

2.> Query and crawl stopped working.

3.> Using PowerShell, I can't get the status of the Search Service Application:

PS> $srchSSA = Get-SPEnterpriseSearchServiceApplication

PS> Get-SPEnterpriseSearchStatus -SearchApplication $srchSSA

Error: Get-SPEnterpriseSearchStatus : Failed to connect to system manager. SystemManagerLocations: net.tcp://sp2013-srch2/CD8E71/AdminComponent2/Management

4.> In the "Search Administration" page within CA, if I click "Content Sources" I get the error message: Sorry, something went wrong

The search application 'ef5552-7c93-4555-89ed-cd8f1555a96b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information.

I used PowerShell to get the ULS log entries for the correlation ID returned in the CA error message:

PS> Merge-SPLogFile -Path "d:\error.log" -Correlation "ef109872-7c93-4e6c-89ed-cd8f14bda96b"

The output shows:

Logging Correlation Data Medium Name=Request (GET:http://sp2013-app1:2013/_admin/search/listcontentsources.aspx?appid=b555d269%255577%2D430d%2D80aa%2D30d55556dc57)

Authentication Authorization Medium Non-OAuth request. IsAuthenticated=True, UserIdentityName=, ClaimsCount=0

Logging Correlation Data Medium Site=/ 05555e9c-5555-555a-69e2-3f65555be9f4

Topology Medium WcfSendRequest: RemoteAddress: 'https://sp2013-srch2:32844/8c2468a555594301abf555ac41a555b0/SearchAdmin.svc' Channel: 'Microsoft.Office.Server.Search.Administration.ISearchApplicationAdminWebService' Action: 'http://tempuri.org/ISearchApplicationAdminWebService/GetVersion' MessageId:

General Medium Application error when access /_admin/search/listcontentsources.aspx, Error=The search application 'ef155572-7555-4e6c-89ed-cd8f14bda96b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information. Server stack trace: at System.ServiceModel.Channels.ServiceChannel.ThrowIfFaultUnderstood(Message reply, MessageFault fault, String action, MessageVersion version, FaultConverter faultConverter) at System.ServiceModel.Channels.ServiceChannel.HandleReply(ProxyOperationRuntime operation, ProxyRpc& rpc) at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Ob General Medium ...(IMethodCallMessage methodCall, ProxyOperationRuntime operation) at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message) Exception rethrown at [0]: at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.ErrorHandler(Object sender, EventArgs e) at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.OnError(EventArgs e) at System.Web.UI.Page.HandleError(Exception e) at System.Web.UI.Page.ProcessRequestMain(Boolean includeStagesBeforeAsyncPoint, Boolean includeStagesAfterAsyncPoint) at System.Web.UI.Page.ProcessRequest(Boolean includeStagesBeforeAsyncPoint, Boolean includeStagesAfterAsyncPoint)

General Medium ...em.Web.HttpApplication.CallHandlerExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute() at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously) 05b5559c-4215-0555-69e2-3f656555e9f4 Runtime tkau Unexpected System.ServiceModel.FaultException`1[[System.ServiceModel.ExceptionDetail, System.ServiceModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]]: The search application 'ef109555-7c93-444c-85555-cd8f14b5556b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information. Server stack trace: at System.ServiceModel.Channels.ServiceChannel.ThrowIfFaultUnderstood(Message reply, MessageFault fault, String action, MessageVersion version, FaultConverter faultConverter) at System.ServiceModel.Channels.ServiceChannel.HandleReply(ProxyOperationRuntime operation, ProxyRpc& rpc)

Runtime tkau Unexpected ...s, TimeSpan timeout) at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation) at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message) Exception rethrown at [0]: at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.ErrorHandler(Object sender, EventArgs e) at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.OnError(EventArgs e) at System.Web.UI.Page.HandleError(Exception e) at System.Web.UI.Page.ProcessRequestMain(Boolean includeStagesBeforeAsyncPoint, Boolean includeStagesAfterAsyncPoint)


1.> In CA, going to "Search Administration" displays the following error message: Search Application Topology - Unable to retrieve topology component health states. This may be because the admin component is not up and running.

2.> Query and crawl stopped working.

3.> Using PowerShell, I can't get the status of the Search Service Application:

PS> $srchSSA = Get-SPEnterpriseSearchServiceApplication

PS> Get-SPEnterpriseSearchStatus -SearchApplication $srchSSA

Error: Get-SPEnterpriseSearchStatus : Failed to connect to system manager. SystemManagerLocations: net.tcp://sp2013-srch2/CD8E71/AdminComponent2/Management

4.> In the "Search Administration" page within CA, if I click "Content Sources" I get the error message: Sorry, something went wrong

The search application 'ef5552-7c93-4555-89ed-cd8f1555a96b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information.

I used PowerShell to get the ULS logs for the correlation ID returned on screen in the CA error message:

PS> Merge-SPLogFile -Path "d:\error.log" -Correlation "ef109872-7c93-4e6c-89ed-cd8f14bda96b"

The output shows:

Logging Correlation Data Medium Name=Request (GET:http://sp2013-app1:2013/_admin/search/listcontentsources.aspx?appid=b555d269%255577%2D430d%2D80aa%2D30d55556dc57)

Authentication Authorization Medium Non-OAuth request. IsAuthenticated=True, UserIdentityName=, ClaimsCount=0

Logging Correlation Data Medium Site=/ 05555e9c-5555-555a-69e2-3f65555be9f4

Topology Medium WcfSendRequest: RemoteAddress: 'https://sp2013-srch2:32844/8c2468a555594301abf555ac41a555b0/SearchAdmin.svc' Channel: 'Microsoft.Office.Server.Search.Administration.ISearchApplicationAdminWebService' Action: 'http://tempuri.org/ISearchApplicationAdminWebService/GetVersion' MessageId:

General Medium Application error when access /_admin/search/listcontentsources.aspx, Error=The search application 'ef155572-7555-4e6c-89ed-cd8f14bda96b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information. Server stack trace: at System.ServiceModel.Channels.ServiceChannel.ThrowIfFaultUnderstood(Message reply, MessageFault fault, String action, MessageVersion version, FaultConverter faultConverter) at System.ServiceModel.Channels.ServiceChannel.HandleReply(ProxyOperationRuntime operation, ProxyRpc& rpc) at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Ob General Medium ...(IMethodCallMessage methodCall, ProxyOperationRuntime operation) at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message) Exception rethrown at [0]: at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.ErrorHandler(Object sender, EventArgs e) at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.OnError(EventArgs e) at System.Web.UI.Page.HandleError(Exception e) at System.Web.UI.Page.ProcessRequestMain(Boolean includeStagesBeforeAsyncPoint, Boolean includeStagesAfterAsyncPoint) at System.Web.UI.Page.ProcessRequest(Boolean includeStagesBeforeAsyncPoint, Boolean includeStagesAfterAsyncPoint)

General Medium ...em.Web.HttpApplication.CallHandlerExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute() at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously) 05b5559c-4215-0555-69e2-3f656555e9f4 Runtime tkau Unexpected System.ServiceModel.FaultException`1[[System.ServiceModel.ExceptionDetail, System.ServiceModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]]: The search application 'ef109555-7c93-444c-85555-cd8f14b5556b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information. Server stack trace: at System.ServiceModel.Channels.ServiceChannel.ThrowIfFaultUnderstood(Message reply, MessageFault fault, String action, MessageVersion version, FaultConverter faultConverter) at System.ServiceModel.Channels.ServiceChannel.HandleReply(ProxyOperationRuntime operation, ProxyRpc& rpc)

Runtime tkau Unexpected ...s, TimeSpan timeout) at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation) at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message) Exception rethrown at [0]: at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.ErrorHandler(Object sender, EventArgs e) at Microsoft.Office.Server.Search.Internal.UI.SearchCentralAdminPageBase.OnError(EventArgs e) at System.Web.UI.Page.HandleError(Exception e) at System.Web.UI.Page.ProcessRequestMain(Boolean includeStagesBeforeAsyncPoint, Boolean includeStagesAfterAsyncPoint)

Runtime tkau Unexpected ...ProcessRequest() at System.Web.UI.Page.ProcessRequest(HttpContext context) at System.Web.HttpApplication.CallHandlerExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute() at System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously

General ajlz0 High Getting Error Message for Exception System.ServiceModel.FaultException`1[System.ServiceModel.ExceptionDetail]: The search application 'ef10-555-a96b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information. (Fault Detail is equal to An ExceptionDetail, likely created by IncludeExceptionDetailInFaults=true, whose value is: System.Runtime.InteropServices.COMException: The search application '7c93-555c-89ed-cd8f555da6b' on server SP2013-SRCH2 did not finish loading. View the event logs on the affected server for more information. at icrosoft.Office.Server.Search.Administration.SearchApi.RunOnServer[T]General ajlz0 High ... at Microsoft.Office.Server.Search.Administration.SearchApi..ctor(String applicationName) at Microsoft.Office.Server.Search.Administration.SearchAdminWebServiceApplication.GetVersion() at SyncInvokeGetVersion(Object , Object[] , Object[] ) at System.ServiceModel.Dispatcher.SyncMethodInvoker.Invoke(Object instance, Object[] inputs, Object[]& outputs) at System.ServiceModel.Dispatcher.DispatchOperationRuntime.InvokeBegin(MessageRpc& rpc) at System.ServiceModel.Dispatcher.ImmutableDispatchRuntime.ProcessMess...) Micro Trace uls4 Medium Micro Trace Tags: 0 nasq,5 agb9s,52 e5mc,21 8nca,0 tkau,0 ajlz0,0 aat87 05b16e9c-4215-00ba-69e2-3f656eabe9f4

Monitoring b4ly Medium Leaving Monitored Scope (Request (GET:http://sp2013-app1:2013/_admin/search/listcontentsources.aspx?appid=be28d269%255577%2D430d%2D555a%2D30d55556dc57)). Execution Time=99.45349199409

Topology e5mb Medium WcfReceiveRequest: LocalAddress: 'https://sp2013-srch2.demo.local:32844/8c2468a555943555bf42cac555f05b0/SearchAdmin.svc' Channel: 'System.ServiceModel.Channels.ServiceChannel' Action: 'http://tempuri.org/ISearchApplicationAdminWebService/GetVersion' MessageId: 'urn:uuid:c2d55565-bb59-4555-b34b-6555ef1d79a5'
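A merged log like this is mostly noise. As a rough sketch in Python (not a SharePoint tool), the helper below keeps only the serious rows. It assumes the file written by Merge-SPLogFile keeps the tab-separated ULS layout with a header row that names `Level` and `Message` columns; the function name and the default set of levels are my own choices.

```python
import csv

# Level values treated as "serious" (my own choice; adjust to taste).
SERIOUS_LEVELS = {"Critical", "Unexpected", "High"}


def serious_entries(lines, levels=SERIOUS_LEVELS):
    """Yield (level, message) for rows whose Level column is in `levels`.

    `lines` is any iterable of text lines (an open file works). The first line
    must be a tab-separated header naming the columns; padding is ignored.
    """
    # QUOTE_NONE: log messages contain stray double quotes, so keep them as text.
    reader = csv.reader(lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    header = next(reader, None)
    if header is None:
        return  # empty input, nothing to report
    columns = [name.strip() for name in header]
    level_i = columns.index("Level")
    msg_i = columns.index("Message")
    for row in reader:
        if len(row) <= max(level_i, msg_i):
            continue  # skip short or wrapped rows
        level = row[level_i].strip()
        if level in levels:
            yield level, row[msg_i].strip()
```

To use it, open the merged file and loop over `serious_entries(log)`. Depending on how the file was written, you may need to pass an `encoding` argument to `open()`.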

I could see that my admin component on SP2013-SRCH2 was not working, so I turned off the machine hoping search would fail over to the other admin component. Nothing changed, and I kept getting the same log errors. I turned the server back on and reviewed the event log on the admin component server (SP2013-SRCH2). The following errors occurred:

Application Server Administration job failed for service instance Microsoft.Office.Server.Search.Administration.SearchServiceInstance + Reason: The device is not ready.

The Execute method of job definition Microsoft.Office.Server.Search.Administration.IndexingScheduleJobDefinition + The search application + on server SP2013-SRCH2 did not finish loading.

Examining the Windows application event logs on SP2013-SRCH2, I noticed errors relating to permissions:

A database error occurred. Source: .Net SqlClient Data Provider Code: 229 occurred 0 time(s) Description: Error ordinal: 1 Message: The EXECUTE permission was denied on the object 'proc_MSS_GetConfigurationProperty', database 'SP_Search'

Unable to read lease from database - SystemManager, System.Data.SqlClient.SqlException (0x80131904): The EXECUTE permission was denied on the object 'proc_MSS_GetLease', database 'SP_Search', schema 'dbo'.
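When several of these errors pile up, it helps to list exactly which objects the account was denied on. Here is a small hypothetical Python helper for that. It assumes you have copied the event messages out as plain text, for example from Event Viewer.

```python
import re

# Pattern for the SQL Server "permission was denied" text quoted in the event log above.
DENIED = re.compile(
    r"The (?P<perm>\w+) permission was denied on the object "
    r"'(?P<obj>[^']+)', database '(?P<db>[^']+)'"
)


def denied_objects(messages):
    """Return unique (permission, object, database) tuples in first-seen order."""
    seen = []
    for text in messages:
        for match in DENIED.finditer(text):
            hit = (match["perm"], match["obj"], match["db"])
            if hit not in seen:
                seen.append(hit)
    return seen
```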

The event log showed the account trying to execute these stored procedures: my Search service account, in this case demo\sp_searchservice.

Initial Hypothesis: It looks like the admin search service is not starting or failing over. Tracing the permissions, I can see that the demo\SP_SearchService account no longer has permission to execute stored procedures in the SP_Search database.

Opening the SP_Search database and looking at effective permissions, I can see that the "SPSearchDBAdmin" role has effective permissions over the failing stored procedures (e.g. proc_MSS_GetConfigurationProperty).

Looking at the account calling the stored procedures (demo\SP_SearchService), I can see it is not assigned to that role. This leads me to conjecture that a management tool, or someone/something else on the farm, has changed the permissions.

Resolution: Fix the permissions on the database. To keep permissions minimal, go to the SP_Search database and add the service account (demo\sp_SearchService) to the SPSearchDBAdmin role.

All the issues recorded in the problem statement were resolved within 5 minutes on the affected farm.

Note: I have a UAT environment in exact sync with my production (PR) environment; UAT already has the correct permissions in place.

↧

SharePoint 2013 Search Limits with an example

Overview: This post aims to provide guidelines for building SharePoint 2013 Search farms. There are 6 Search components (labelled C1-C6 below) and 4 database types (labelled DB1-DB4). Index partitions are a big factor in search planning.

Example: Throughout this post I provide an example of a 60 million item search farm with redundancy/High Availability (HA).

Index partitions: Microsoft recommends 1 index partition per 10 million items, but this really depends on IOPS and how heavily query is used. A twinned partition (partition column) is needed for HA, and it will also improve query time over a single partition.

Example: So, assuming a maximum of 10 million items per partition, an HA farm for 60 million items requires 6 partitions.

Index component (C1): 2 index components for each partition.

Example: 12 index components.
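The partition arithmetic above can be written down as a small planning helper, sketched here in Python. It only encodes the rule of thumb: 10 million items per partition and 2 index components per partition for HA. The function name and defaults are mine. Real limits depend on IOPS and query load, as noted above.

```python
import math

ITEMS_PER_PARTITION = 10_000_000    # rule of thumb quoted above
INDEX_COMPONENTS_PER_PARTITION = 2  # twinned partition for HA


def index_layout(total_items,
                 items_per_partition=ITEMS_PER_PARTITION,
                 replicas=INDEX_COMPONENTS_PER_PARTITION):
    """Return (index partitions, index components) for a planned item count."""
    # Always plan at least one partition, even for an empty farm.
    partitions = max(1, math.ceil(total_items / items_per_partition))
    return partitions, partitions * replicas


print(index_layout(60_000_000))  # (6, 12) for the 60 million item example
```

The useful part is feeding in your own planned item count. The printed line just reproduces the example in this post.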

Query component (C2): Use 2 query processing components for HA/redundancy, and add another 2 query processing components once you reach 80 million items.

Example: 2 Query components.

Crawl database (DB1): Use 1 crawl database per 20 million items. This is probably the most commonly overlooked item in search farms. The crawl database contains tracking and historical information about the crawled items, such as the last crawl ID and time, and the crawl history. The crawl component feeds into the crawl database. With medium usage, each crawl database should stay under 100GB; add another crawl database before an existing one reaches 20 million items or 100GB.

Example: 3 crawl databases at 20 million items each allows for a search farm containing 60 million items.
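The crawl database guideline has two limits, items and size, and whichever is hit first decides. Here is a minimal Python sketch. It assumes you can estimate the total crawl store size yourself; the names and the size-estimate parameter are my own.

```python
import math

MAX_ITEMS_PER_CRAWL_DB = 20_000_000  # items per crawl database (guideline above)
MAX_GB_PER_CRAWL_DB = 100            # keep each crawl database under ~100GB


def crawl_databases(total_items, estimated_total_gb=0.0):
    """Number of crawl databases so that neither guideline is exceeded."""
    by_items = math.ceil(total_items / MAX_ITEMS_PER_CRAWL_DB)
    by_size = math.ceil(estimated_total_gb / MAX_GB_PER_CRAWL_DB)
    return max(1, by_items, by_size)


print(crawl_databases(60_000_000))  # 3, as in the example
```

The guideline says to add a database before reaching these limits, so you may want to plan with some headroom, for example by lowering the per-database item count.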

Link database (DB2): Use 1 link database per 60 million items. I believe 1 link database will handle up to 100 million items.

Example: 1 link database.

Analytics reporting database (DB3): Add 1 search analytics reporting database for every 500,000 unique items viewed each day, or for every 10-20 million total items. This is the heavy search database. Add a new database to keep each analytics reporting database under roughly 250GB.

Example: Start with 1 and grow as needed.

Analytics Processing Component (C3):

Content Processing Component (C4): processes crawled items and moves the item data to the index component. Its function is to parse documents, perform property mapping and entity extraction, perform language processing, and ultimately turn crawled items into indexed items.

Example: 4 Content Processing components.

Admin component (C5): Use 1 administration component, or 2 for redundancy/HA, regardless of farm size.

Example: 2 Admin components.

Admin database (DB4): Low usage; even in big farms you only need 1 database. It should stay well under 100GB.

Example: 1 Admin database.

Crawl component (C6): The crawl component crawls content sources and delivers crawled items, including metadata, to the Content Processing component. In SP2013 you don't specify the relationship between the crawl database and the crawl component; the crawl component distributes to all available crawl databases. The 3 types of crawl available in SP2013 are Full, Incremental and Continuous (Continuous only works for SP2013 content). Schema changes still require a full crawl to pick up the change in SP2013. Crawl does not do as much analysis as it did in SP2010, so it is a much lighter/faster process.

Example: 2 Crawl components allow for HA and improved performance.

Database Hardware: For the example, use 8 CPUs and 16GB of RAM; disk size depends on content but is smaller than in SP2010.

Placing components on VMs for the example:

Group your search roles onto servers:

- Index & Query Processing

- Analytics & Content Processing

- Crawl, Content processing & Search Admin

More Info:

Troubleshooting Crawl

↧

DevOps and SharePoint

Components of DevOps

- IT Automation

- Agile Development

- Operation and dev teams working together

- Service virtualisation

- Continuous releases

- Automated testing (recorded web UI & unit testing)

- Performance

Why DevOps

- Collaboration between Dev & Ops teams; reduce unknowns early on, info easily available, better decision making, improved governance.

- Deliver apps faster; faster time to market

- Improve App quality; environments available and not tedious to deploy

- Reduce costs; fewer staff required as tasks are automated

There are different levels of maturity, and your final goal will depend on your company. Operations departments are very different in a bank compared to Facebook.

The 4 main stages of DevOps maturity are:

- Early - Manual traditional approach to software delivery. Little or no automation.

- Scripted - Moving toward more automation. Some scripting is used to assist the tracked, recorded deployment process through the DTAP environments.

- Automation - A reusable, common automation approach to releasing applications. Workflow/orchestration uses the automation capabilities across all the DTAP environments.

- Continuous Delivery - An end-to-end application delivery process from dev to production environments. Probably includes Continuous Integration and nightly builds.

Presentation at SharePoint Saturday 2013 on Infrastructure as Code (IaC):

http://blog.sharepointsite.co.uk/2013/11/iac-presentation-for-sharepoint.html

↧

Remote Debugging SharePoint 2013

Overview: "Object reference not set to an instance of an object" is all the information I have in my customer's ULS logs. I need to debug an application in production, on servers that do not have Visual Studio 2012 installed.

My Steps:

- Ran the Remote Debugger (msvsmon.exe) on 1 of the production servers

- Added a hosts file entry to point to my production server "SP-WFE1" (optional, depending on where the web requests originate from)

- Opened Visual Studio 2012 on a VM containing the project (hooked up to TFS 2012) and opened the solution (.sln)

- Selected Tools > Attach to Process

..... Finish when time permits

More Info:

http://msdn.microsoft.com/en-us/library/ff649389.aspx

http://www.dotnetmafia.com/blogs/dotnettipoftheday/archive/2008/03/05/how-to-remote-debugging-a-web-application.aspx

↧

↧

Adding Additional Search Crawl database to a SP2013 Search farm

Problem: If you follow Microsoft recommendations for search farms, one threshold is passed fairly quickly: a search crawl database should not exceed 20 million items. I have a 12 server search farm with four index partitions (recommendation: 10 million items per index partition). I need to add a second crawl DB.

Initial Hypothesis: Figure out your current crawl databases.

Add additional Search Crawl databases

Review the existing crawl search databases

I proved this on a test system by resetting my index and recrawling after adding 3 crawl databases.

Resolution: Add more crawl databases to the search service application.

$SSA = Get-SPEnterpriseSearchServiceApplication

$searchCrawlDBName = "SP_Search_CrawlStore2"

$searchCrawlDBServer = "SP2013-SQL3" # SQL alias; could also be the connection string

$crawlDatabase = New-SPEnterpriseSearchCrawlDatabase -SearchApplication $SSA -DatabaseName $searchCrawlDBName -DatabaseServer $searchCrawlDBServer

$crawlStoresManager = New-Object Microsoft.Office.Server.Search.Administration.CrawlStorePartitionManager($SSA)

$crawlStoresManager.BeginCrawlStoreRebalancing()

PowerShell to see what is going on:

cls

$SSA = Get-SPEnterpriseSearchServiceApplication

$crawlStoresManager = New-Object Microsoft.Office.Server.Search.Administration.CrawlStorePartitionManager($SSA)

Write-Host "CrawlStoresAreUnbalanced:" $crawlStoresManager.CrawlStoresAreUnbalanced()

Write-Host "CrawlStoreImbalanceThreshold:" $SSA.GetProperty("CrawlStoreImbalanceThreshold")

Write-Host "CrawlPartitionSplitThreshold:" $SSA.GetProperty("CrawlPartitionSplitThreshold")

$crawlLog = New-Object Microsoft.Office.Server.Search.Administration.CrawlLog $SSA

$dbInfo = $crawlLog.GetCrawlDatabaseInfo()

Write-Host "Number of Crawl Databases:" $dbInfo.Count

$dbInfo.Values

More Info:

http://blogs.msdn.com/b/sharepoint_strategery/archive/2013/01/28/powershell-to-rebalance-crawl-store-dbs-in-sp2013.aspx

↧

Audit and documenting a SharePoint on prem farm

Overview: I have had and done several SharePoint infrastructure audits over the past few years. These can range from running through best-practice checklists to see what the customer's setup has, to finding out why they may have chosen to diverge.

There are a lot of good tools and checklists to help you audit your farm, such as the built-in Health Analyzer, Central Admin, PowerShell scripts, and a great tool to help document your farm, SPDocKitSetup from Acceleratio.

Tooling:

- PowerShell

- Health Analyzer

- Central Admin

- ULS logs and event logs

- IIS

- Fiddler

- SQL Management Studio, SQL profiler

- Tool to audit and document your SharePoint farm: SPDocKitSetup

- Third party tools like DocAve and Metalogix (Idera had a decent tool previously) can help audit and document your system.

- Document the farm's topology;

- Verify versions and components installed;

- Compare MS recommendations to the farm settings, provide findings and allow the customer time to explain why these settings differ; and

- Monitoring, troubleshooting and performance.

SharePoint:

SharePoint Logs - I put these on my D: drive

Multiple install accounts

List all customisations, especially WSPs and custom code

External Access - DMZ, network

IIS:

Change the IIS default log location to a separate disk

Reclaim old IIS logs (back up and remove them from the WFEs)
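Before backing up and removing old logs, it helps to see what there is to reclaim. Here is a small Python sketch that lists candidate files. It only lists and never deletes. The 30 day default is an arbitrary example. The usual IIS 7+ default folder is `%SystemDrive%\inetpub\logs\LogFiles`, but pass whatever path your sites actually log to.

```python
import time
from pathlib import Path

SECONDS_PER_DAY = 86_400


def is_stale(mtime, max_age_days, now):
    """True if a file last modified at `mtime` is older than `max_age_days` at `now`."""
    return mtime < now - max_age_days * SECONDS_PER_DAY


def old_log_files(log_root, max_age_days=30):
    """Sorted *.log files under `log_root` that are candidates for backup and removal.

    Nothing is deleted here: back the files up first, then remove them.
    """
    now = time.time()
    return sorted(
        path for path in Path(log_root).rglob("*.log")
        if path.is_file() and is_stale(path.stat().st_mtime, max_age_days, now)
    )
```

For example, `old_log_files(r"C:\inetpub\logs\LogFiles", 30)` returns the files you would then back up and remove.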

SQL basic checks:

I/O, CPU, Memory on the base database machine/s

MaxDop = 1

Initial mdf and ldf sizing

ldf on fast disk, mdf of content DBs on the slowest disk (or just put it all on fast disk)

Backups

Maybe Reminders:

Remove all certificate files that are not needed (*.cer & *.pfx)

Ensure local account user names/passwords are changed & secure (I have a local admin account on the Windows template I use to create all my VMs; ensure this account is disabled or secured).
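To find leftover certificate files before deciding which ones are still needed, a minimal Python sketch could look like this. The folder to search is up to you. Pointing it at specific folders, such as install shares or temp folders, is quicker than scanning a whole drive.

```python
from pathlib import Path

CERT_SUFFIXES = {".cer", ".pfx"}


def is_cert_file(name):
    """True for names ending in .cer or .pfx, in any letter case."""
    return Path(name).suffix.lower() in CERT_SUFFIXES


def find_cert_files(root):
    """Sorted certificate files under `root`; review each one before deleting it."""
    return sorted(p for p in Path(root).rglob("*") if p.is_file() and is_cert_file(p.name))
```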

More Info:

http://nikpatel.net/2012/03/11/checklist-for-designing-and-implementing-sharepoint-2010-extranets-things-to-consider/

https://habaneroconsulting.com/insights/Do-you-need-a-SharePoint-infrastructure-audit

http://blog.muhimbi.com/2009/05/managing-sharepoints-audit.html

http://www.portalint.com/thoughts-on-sharepoint-audit/

↧

EventLog Error Fix

Overview: After building my farms, I trawl through the ULS and event logs looking for log messages that identify any issues.

Problem: My event log shows a Windows/IIS error whereby the IIS site's application pool uses a service account that does not have a user profile on the machine. The error message reads "Event Id: 1511 Windows cannot find the local profile and is logging you on with a temporary profile. Changes you make to this profile will be lost when you log off."

Verify the issue:

Resolution: (IEDaddy's post gave me the resolution)

1.> Stop the processes that use the account (I stopped the web sites that used the application pool account "demo\OD_Srv")

2.> cmd prompt> net localgroup administrators demo\OD_Srv /add

3.> cmd prompt> runas /u:demo\OD_Srv /profile cmd

4.> in the new cmd prompt run > echo %userprofile%

5.> Check the user profiles and verify the profile store for the account (demo\OD_Srv) has a status of "Local"

6.> Remove the account from the local administrators group, i.e. cmd> net localgroup administrators demo\OD_Srv /delete

More Info:

http://www.brainlitter.com/2010/06/08/how-to-resolve-event-id-1511windows-cannot-find-the-local-profile-on-windows-server-2008/

http://todd-carter.com/post/2010/05/03/give-your-application-pool-accounts-a-profile/

↧

Capturing data for SharePoint

I got an email from an old school friend who had heard I do some SharePoint stuff.

"I need your advice on a Sharepoint question. We have a client that need users to capture forms and the ablity to create new forms on the fly, does this sound possible?"

My dashed off reply is below - comments are welcome

On the SharePoint question, here is the deal with forms. InfoPath was the standard tool for creating web forms for SharePoint; that said, about three weeks ago Microsoft announced that it is no longer the product of choice and that it will not be supported after 10 years. It really comes down to how complex the requirement is and where you want your data stored.

Out of the box, SharePoint lets users create lists. These are not too complex and the logic is generally pretty simple. Lists work really well if your requirement is lots of simple web forms with non-relational data. It is all native and needs very little training, but customising the default look and functionality gets expensive very quickly (you end up injecting custom JS). There is also a tool, SharePoint Designer, that can be used to customise the forms. When you create a list, its CRUD forms are created for you automatically.

InfoPath: a tool for drawing forms with custom logic. It has lots of issues when things get complicated, but if you need a lot of forms fast with some logic, it is still a good option.

K2 and Nintex have forms engines; I have used K2 smartforms. For workflow forms and complex forms this is a good option, but more so if you have a dedicated forms team (or person). If you told me you need 1000 forms with complex logic, that more forms will be added over time, that there is workflow, and that you will permanently have dedicated forms requirements, this is a good option. Be careful, though: it is not as easy as folks may make out.

PDF Share Forms works with SP, so if your client has PDF forms, make sure you look at this. I've never used this approach, but it seems plausible.

Custom options: you can build and deploy ASPX pages. This is slow but good for customisation, and a good option if you have unique, complex requirements (think a drawing tool), as you basically have full C# control. You can also create web apps and consume them in SharePoint.

With what I think your skill set is at .., it is probably also worth looking at ASP.NET MVC or building the forms in .NET code (Web Forms), then displaying them in SharePoint using an iFrame or the new SP2013 app model. You can secure the app using claims-based authentication, OpenID or OAuth.

Those are your basic options. Send me some more detail and I can try to give you a clearer match.

Also see:

http://go.limeleap.com/community/bid/286331/Forms-in-SharePoint-Seven-Ways-to-Create-a-Form-in-SharePoint

http://blogs.office.com/2013/03/04/options-to-create-forms-in-sharepoint-2013/#comments

↧

↧

Installing SP1 for SharePoint 2013

Overview: I need to upgrade from the SP2013 June 2013 CU to SP2013 SP1.

Tip: SP1 does not require the March 2013 PU to be installed. In my situation it was already installed.

Steps:

1.> Check there are no upgrades pending.

2.> Install SP1 on each server in the farm that has the SharePoint binaries installed.

3.> Check that an upgrade is required: PS> (Get-SPServer $env:COMPUTERNAME).NeedsUpgrade

If this returns True on all SharePoint servers (on a large farm you can also verify this in Central Administration), then:

4.> PS> psconfig.exe -cmd upgrade -inplace b2b -force (This upgrades the SharePoint databases and completes the upgrade on the first server.)

5.> Run psconfig on each of the remaining SharePoint servers in the farm.
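Steps 3 to 5 boil down to a simple gate. Only run PSConfig once every server reports NeedsUpgrade = True, then run it on one server first and on the rest afterwards. Below is a minimal Python sketch of that ordering logic, assuming you have collected the `(Get-SPServer $env:COMPUTERNAME).NeedsUpgrade` value from each server by hand. The function name and the server names are hypothetical.

```python
# The command from step 4 of the post.
PSCONFIG_CMD = "psconfig.exe -cmd upgrade -inplace b2b -force"


def psconfig_plan(needs_upgrade: dict[str, bool]) -> list[tuple[str, str]]:
    """Return (server, command) pairs in the order PSConfig should be run.

    `needs_upgrade` maps each SharePoint server to its NeedsUpgrade value.
    The first server in the mapping is treated as the one to upgrade first
    (hypothetical convention for this sketch).
    """
    not_ready = [server for server, flag in needs_upgrade.items() if not flag]
    if not_ready:
        # Step 3: do not start PSConfig until every server reports True.
        raise ValueError(f"NeedsUpgrade is not True on: {', '.join(not_ready)}")
    # Steps 4 and 5: the first server, then the remaining servers.
    return [(server, PSCONFIG_CMD) for server in needs_upgrade]


plan = psconfig_plan({"SP-APP01": True, "SP-WFE01": True, "SP-WFE02": True})
for order, (server, cmd) in enumerate(plan, start=1):
    print(order, server, cmd)
```

A server reporting False usually means SP1 has not yet been installed on it (step 2), so the sketch refuses to produce a plan rather than starting a partial upgrade.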

Result: The farm should now be upgraded. My dev farms upgraded, but my UAT and production farms did not complete the upgrade; the fixes are below.

More Info:

http://blogs.msdn.com/b/sambetts/archive/2013/08/22/sharepoint-farm-patching-explained.aspx
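When the upgrade does not complete, the PSConfig diagnostic log is where the cause shows up, as with both problems below. Below is a minimal Python sketch that pulls the error and warning entries out of such a log. It assumes each entry carries an ERR or WRN severity token as its own word followed by the message, as in the excerpts below. The exact log layout and the function name are assumptions.

```python
import re

# Assumption: the severity token (ERR or WRN) is a separate word before the message.
SEVERITY = re.compile(r"\b(ERR|WRN)\b\s+(.*)")


def problems(lines):
    """Yield (severity, message) for every ERR or WRN entry in the log lines."""
    for line in lines:
        match = SEVERITY.search(line)
        if match:
            yield match.group(1), match.group(2).strip()


# Sample lines taken from the excerpts in this post.
sample = [
    "ERR Failed to upgrade SharePoint Products.",
    "at Microsoft.SharePoint.PostSetupConfiguration.UpgradeTask.Run()",
    "WRN Unable to create a Service Connection Point in the current Active Directory domain.",
]
for severity, message in problems(sample):
    print(severity, message)
```

To scan a real log, pass the open file object (opened with whatever encoding the file uses) instead of `sample`; stack-trace lines without a severity token are skipped.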

***************

Problem: The Usage and Health database cannot be in an AlwaysOn Availability Group (AOAG) during the upgrade.

ERR Failed to upgrade SharePoint Products.

An exception of type System.Data.SqlClient.SqlException was thrown. Additional exception information: The operation cannot be performed on database "SP_UsageAndHealth" because it is involved in a database mirroring session or an availability group. Some operations are not allowed on a database that is participating in a database mirroring session or in an availability group.

ALTER DATABASE statement failed.

System.Data.SqlClient.SqlException (0x80131904): The operation cannot be performed on database "SP_UsageAndHealth" because it is involved in a database mirroring session or an availability group. Some operations are not allowed on a database that is participating in a database mirroring session or in an availability group.

ALTER DATABASE statement failed.

at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)

at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj, Boolean callerHasConnectionLock, Boolean asyncClose)

at System.Data.SqlClient.TdsParser.TryRun(RunBehavior runBehavior, SqlCommand cmdHandler,

Tip: No CU, PU or SP will complete the upgrade while the Usage and Health database is in an AOAG. You need to take the database out of the availability group before you perform the upgrade.

Initial Hypothesis: The error message makes it clear that the upgrade process cannot modify the UsageAndHealth database while it is part of an availability group. Because I connect through a SQL alias, one option is to repoint the alias at the primary replica, do the upgrade, and then point the alias back at the listener. The approach I use instead is to remove the usage database from the AOAG, perform the SharePoint upgrade, and then add the database back to the AOAG.

Resolution:

1.> Remove the UsageAndHealth database from the availability group.

2.> Perform the SP1 upgrade.

3.> Change the database recovery model to FULL and take a full backup.

4.> Add the database back to the availability group.
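The four resolution steps map onto a handful of T-SQL statements run against the primary replica. Below is a minimal Python sketch that generates them so they can be reviewed and pasted into SSMS. The availability group name AG1 and the backup path are hypothetical placeholders. The sketch does not cover preparing the secondary replicas (restoring the backups there WITH NORECOVERY and joining the database to the group), which still has to be done before the database synchronises again.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def aoag_upgrade_script(database: str, ag: str, backup_file: str) -> dict[str, list[str]]:
    """Return the T-SQL for the phases before and after the SP1 upgrade (primary only)."""
    db, group = quote_name(database), quote_name(ag)
    path = backup_file.replace("'", "''")  # escape quotes for the N'...' literal
    return {
        # Step 1: take the database out of the availability group.
        "before_upgrade": [
            f"ALTER AVAILABILITY GROUP {group} REMOVE DATABASE {db};",
        ],
        # Steps 3 and 4, once PSConfig (step 2) has finished.
        "after_upgrade": [
            f"ALTER DATABASE {db} SET RECOVERY FULL;",
            f"BACKUP DATABASE {db} TO DISK = N'{path}';",
            f"ALTER AVAILABILITY GROUP {group} ADD DATABASE {db};",
        ],
    }


# Hypothetical AG name and backup location.
script = aoag_upgrade_script("SP_UsageAndHealth", "AG1", r"E:\Backup\SP_UsageAndHealth.bak")
for phase, statements in script.items():
    print(f"-- {phase}")
    print("\n".join(statements))
```

Generating the statements rather than running them keeps a human in the loop between the two phases, which matches the manual remove, upgrade, re-add flow described above.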

***************

Problem: When I run PSConfig to upgrade my SP2013 farm to SP1, the upgrade fails, and the PSConfig diagnostic log shows the following:

WRN Unable to create a Service Connection Point in the current Active Directory domain. Verify that the SharePoint container exists in the current domain and that you have rights to write to it.

Microsoft.SharePoint.SPException: The object LDAP://CN=Microsoft SharePoint Products,CN=System,DC=demo,DC=dev doesn't exist in the directory.

at Microsoft.SharePoint.Administration.SPServiceConnectionPoint.Ensure(String serviceBindingInformation)

at Microsoft.SharePoint.PostSetupConfiguration.UpgradeTask.Run()
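The warning names the exact Active Directory object that PSConfig could not find: a container called Microsoft SharePoint Products under CN=System in the domain's naming context. Below is a minimal Python sketch that builds that distinguished name from a DNS domain name. It can help when you check the path in ADSI Edit. The function name is hypothetical, and the sketch assumes the container sits directly under CN=System, as the message shows.

```python
def scp_container_dn(dns_domain: str) -> str:
    """Build the DN of the SharePoint Service Connection Point container.

    For example, demo.dev gives
    CN=Microsoft SharePoint Products,CN=System,DC=demo,DC=dev
    """
    labels = [label for label in dns_domain.strip(".").split(".") if label]
    if not labels:
        raise ValueError("a DNS domain name such as demo.dev is required")
    domain_dn = ",".join(f"DC={label}" for label in labels)
    return f"CN=Microsoft SharePoint Products,CN=System,{domain_dn}"


print("LDAP://" + scp_container_dn("demo.dev"))
```

For demo.dev this reproduces the path in the log message. As the warning says, the container must exist at that path, and the account running PSConfig needs rights to write to it.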

More Info:

http://onpointwithsharepoint.blogspot.co.uk/2013/06/configuring-service-connection-points.html

http://sharepointfreshman.wordpress.com/2012/03/02/1-failed-to-add-the-service-connection-point-for-this-farm/

http://gallery.technet.microsoft.com/ScriptCenter/af31bded-f33f-4c38-a4e8-eaa2fab1c459/

http://blogs.technet.com/b/balasm/archive/2012/05/18/configuration-wizard-failed-microsoft-sharepoint-spexception-the-object-ldap-cn-microsoft-sharepoint-products-cn-system-dc-contoso-dc-com-dc-au-doesn-t-exist-in-the-directory.aspx

↧

SharePoint Online Random Tips

1.> SPO and PowerShell:

To connect to and use SharePoint Online with PowerShell, you cannot be on a Small Business (P) plan. The Midsize Business and Enterprise Office 365 plans allow the SPO PowerShell cmdlets to run (there are very few cmdlets so far).